Distributed

PLASMA Lab can be set to a distributed mode when running an experiment. This mode uses a second software called PLASMA Lab Service.

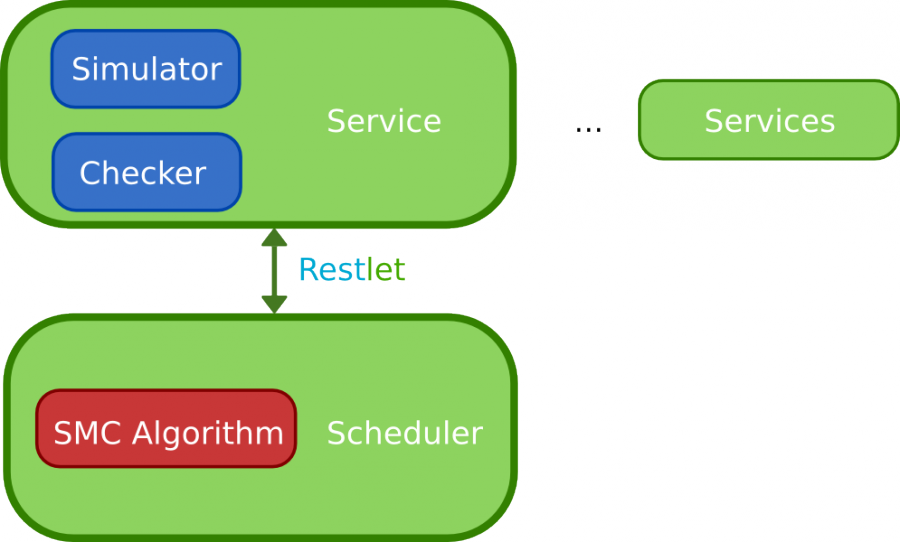

In distributed mode Simulator and Checker are deported to the Service while the Algorithm is split between a Scheduler in PLASMA Lab and a Worker in the Service. The communication between the Scheduler and the Worker use RestLet.

Sequence diagram

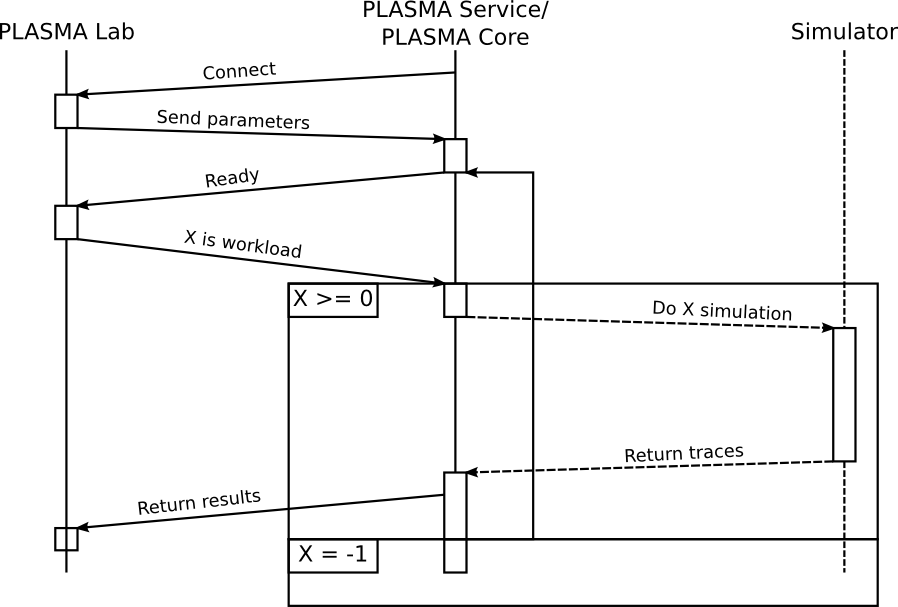

Step 0: Publishing a Restlet service

PLASMA Lab: I need help to run this experiment.

When launching a distributed experimentation, PLASMA Lab publish an interface on a Restlet Server (this server being part of PLASMA Lab code). Publishing this interface will allow other program to retrieve the interface and use its functions.

We call Client a PLASMA Service instance and the Server the PLASMA Lab instance who published the interface.

Step 1: Connecting to PLASMA Lab

PLASMA Service: I can help you.

PLASMA Lab: Ok, here are the experiment parameters.

When a client connect itself to the server, it retrieves the RMI Interface and call the connect function. This function return the experiment parameters containing the model and the list of properties to check. These parameters also contains an id to allow clients to identify themselves. Step 2: Getting a share of the work

PLASMA Service: I am ready to work.

PLASMA Lab: Run K simulation and check the properties.

The client then call the service ready function. This function will inform the server that this client is ready and will return an integer K. This integer represents the number of traces that the client has to generate. K can take several values:

- K = -1 means that the work is done, the client can disconnect.

- K = 0 tells the client to wait. This is used to wait for more client to connect before launching the simulation.

- K > 0 tells the client to generate K execution traces and check the properties on these traces.

Step 3: Returning the results

PLASMA Service: Here are my results.

The client call the work done function. This function send the results to the server. The client then return to step 2.

Scheduler

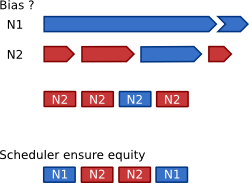

Avoiding bias

Because a negative trace -on which a property does not hold- is faster to check than a positive trace -you have to read the whole trace to see that it is a positive trace- a biais can form on a distributed Statistical Model Checking.

Scheduler

Our Scheduler keep the order of each task assigned to a client and only take results into account on this order. This ensure that a task launched at time t will be taken into accound before any task launched at a time > t.

Moreover, this scheduler ensure that a faster client will be given more work and contribute more to the overall effort. We also implemented some ideas coming from the Slow-Start algorithm used in TCP to reduce the client-server communications.

A Scheduler implements the same interface as a local Algorithm:

- fr.inria.plasmalab.algorithm.InterfaceAlgorithmScheduler

Worker

Each of our distributed implementation are based on a similar protocol described in the earlier sequence diagram. However this protocol only depends on the Scheduler-Worker implementation and could be changed depending on particular needs. The only constraints being that connect method of the Worker will be called to initiate the communication with the Scheduler.

A Worker implements:

- fr.inria.plasmalab.algorithm.InterfaceAlgorithmWorker

Order and Task

Order

Whenever a Worker tell the Scheduler it is ready to work, the Scheduler will transmit an Order (package fr.inria.plasmalab.distributed.algorithm.common). This Order indicate the number of simulation -a batch- to run and check. Other type of Order can be transmitted:

- Batch - Indicate the number of simulation to run and check

- Resend - Ask to resend previous results

- Wait - Indicate to wait for a given time

- Terminate - Indicate to disconnect and close the Service

Task Manager

Each Order (an amount of work to do) is associated to a unique identifier, a Task. A Task will then be assigned to a Worker. These association are handled by the NodeTaskManager (package fr.inria.plasmalab.distributed.algorithm.server).

As explained in the bias section, results must be taken into account in the same order that corresponding task were assigned. This is done by the TaskScheduler (package fr.inria.plasmalab.distributed.algorithm.server).

Heartbeat

In case of a Worker disconnection, or another error, the Task is reaffected by the NodeTaskManager to the next available Worker.

A Heartbeat system allows the NodeTaskManager to monitor the liveness of Worker. Using an UDP socket, Worker must regularly transmit heartbeat packet. If these transmission cease, Worker are declared disconnected. Class involved in the Heartbeat system are found in the fr.inria.plasmalab.distributed.algorithm.heartbeat package.

RestLet

Our distributed algorithms use RestLet to handle communication between Scheduler and Workers. RestLet is a Restful framework API for Java.

More details on RestLet API can be found on http://restlet.com/ or around the Web.